Standing up to our ears

Of all my five senses, the one I trust the least is my hearing. My ears give me some fantastically useful and entertaining information, yet all the while they sneak little falsehoods and biases into my mind. It’s as if they say, “The Brooklyn Bridge is 486.3 meters long, it spans the East River from Manhattan to Brooklyn, and having fallen on hard times we can sell it to you for the low price of $500k.” I would be a fool to take everything these tricky lobed appendages have to say at face value. I am speaking in a strictly technical sense, of course; I am not losing my mind (yet).

Our hearing varies remarkably from person to person. Our ability to discern small differences in tone and volume, or to recall details about audio over even short periods, is quite fickle indeed. The shape of our ears is at least as unique as our fingerprints. When a study is done of some aspect of human hearing, the sample is large, as many people as the researchers can reasonably get. Steps are taken to eliminate the possibility of suggestion, to validate that the test subjects are actually discerning and not just guessing, and to keep environmental factors from skewing the results. I suggest that these safeguards should become part of personal practice for discerning audio professionals and consumers.

Testing should always include some controls. In medical testing, a placebo is introduced as a control. In audio, the devices being tested are often integral to the reproduction, so it is impossible to present a completely unaffected placebo signal; you can’t play silence to someone and call it music. You can do the next best thing: test whether the listener can reliably tell the difference between the products. If they can’t, the whole exercise is over. The listener may as well pick the device with the price or appearance they like, since they will not otherwise be able to tell the difference. A simple preliminary test provides a nice control. If two products are being tested, employ four test signals: two are the products themselves (A and B) and the other two are the same products again, this time unlabeled (X and Y). After listening to A and B followed by X and Y, the listener decides either that A is X and B is Y or that A is Y and B is X. Repeat the test ten times or so, randomly switching the pairs, and if the listener does not get 8 (80%) or more correct it’s highly probable that they are guessing. Here is Jan’s ABX Test from Sieveking Sound. It’s a great and entertaining online illustration of this exact type of test.
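To put a number on "probably guessing", here is a minimal Python sketch. It assumes each trial is a fair coin flip for someone who hears no difference; the function name `chance_of_guessing` is my own invention for illustration and not part of any ABX tool.

```python
from math import comb


def chance_of_guessing(correct: int, trials: int) -> float:
    """Probability of scoring at least `correct` out of `trials`
    when every answer is a 50/50 guess (binomial upper tail)."""
    # Count every way to land on `correct` or more right answers...
    favorable = sum(comb(trials, k) for k in range(correct, trials + 1))
    # ...out of all 2**trials equally likely answer sheets.
    return favorable / 2 ** trials


if __name__ == "__main__":
    for score in (6, 7, 8, 9, 10):
        luck = chance_of_guessing(score, 10)
        print(f"{score}/10 or better by luck alone: {luck:.1%}")
```

By this arithmetic a pure guesser reaches 8 out of 10 or better only about 5.5% of the time, while 7 out of 10 or better turns up by luck roughly 17% of the time. That gap is why 8 makes a sensible cut-off for a ten-trial test.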

With the exception of social experiments, test subjects are generally not allowed to interact with each other during a test, for obvious reasons. People influence each other with what they say and how they sigh or groan; even a facial expression can communicate clearly what one thinks. What I’m advocating is using a double-blind or ABX format for personal evaluation as a first line of defense against our own auditory confusion. Here there is no possibility of outside influence: the only person the tester can fool is themselves, and that is exactly what the ABX test is designed to combat. You will know right away whether the difference is significant enough to be worth the cost.
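For a personal test, the random switching has to be hidden from the listener. One way to do that is sketched below in Python: a script (or a patient friend) holds a shuffled answer key and only reveals the score at the end. The names `make_schedule` and `score`, and the "AB"/"BA" encoding, are hypothetical choices of mine, not taken from any existing ABX utility.

```python
import random
from typing import List, Optional


def make_schedule(trials: int = 10, seed: Optional[int] = None) -> List[str]:
    """Build a hidden answer key, one entry per trial.

    "AB" means X is product A and Y is product B;
    "BA" means X is product B and Y is product A.
    """
    rng = random.Random(seed)  # pass a seed only to reproduce a run
    return [rng.choice(["AB", "BA"]) for _ in range(trials)]


def score(schedule: List[str], answers: List[str]) -> int:
    """Count the trials where the listener's answer matches the key."""
    if len(schedule) != len(answers):
        raise ValueError("need exactly one answer per trial")
    return sum(key == answer for key, answer in zip(schedule, answers))


if __name__ == "__main__":
    key = make_schedule(trials=10)
    # In a real session these would be written down by the listener,
    # one per trial, before the key is revealed.
    example_answers = ["AB"] * 10
    print(f"Score: {score(key, example_answers)} out of {len(key)}")
```

The important design point is that the key is generated fresh for every session and stays out of sight until all the answers are committed.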

Consider the “Audibility of a CD-Standard A/D/A Loop Inserted into High-Resolution Audio Playback” research project by Meyer and Moran. In this study, 60 participants were tested a total of 554 times in an attempt to determine whether any of them could reliably tell the difference between a recording at SACD (Super Audio Compact Disc) quality and regular CD quality. Not a single person at any point in the study was able to conclusively distinguish between the two. The authors were looking for a 95% confidence level as conclusive. It was no surprise that the group of audiophiles and studio professionals got the best score; the surprising part was that in 467 trials they got only 246 correct answers. That’s 52.7%, barely better than a coin toss. The best result of the entire study was 8 out of 10, and that happened only once. The next best were two scores of 7 out of 10. Testers were invited to take as many tests as they liked and were given the option to listen comparatively as many times as they wanted before committing to a choice. The results have been hotly debated in audiophile circles but have not, to my knowledge, yet been challenged or replicated (the authors welcome and encourage either outcome).
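To see why even the best score in that study falls short, here is a small Python sketch that finds the lowest score a listener must reach before luck becomes an unlikely explanation. It assumes "conclusive" means a 95% confidence level against coin-flip guessing, which is my reading and not a calculation taken from the paper; the function name `min_correct_needed` is hypothetical.

```python
from math import comb


def min_correct_needed(trials: int, confidence: float = 0.95) -> int:
    """Smallest score that a pure guesser would reach or beat with
    probability no greater than 1 - confidence.

    A result larger than `trials` means the test is too short to ever
    reach that confidence.
    """
    alpha = 1.0 - confidence
    tail = 0.0  # chance of guessing a score strictly above k
    for k in range(trials, -1, -1):
        tail_with_k = tail + comb(trials, k) / 2 ** trials
        if tail_with_k > alpha:
            # Including k makes luck too plausible; stop one score higher.
            return k + 1
        tail = tail_with_k
    return 0


if __name__ == "__main__":
    for n in (10, 20):
        print(f"{n} trials: need at least {min_correct_needed(n)} correct")
```

Under that assumption a ten-trial run needs 9 correct answers, so a lone 8 out of 10 is a near miss and not a demonstration of golden ears.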

Consider also this test of multi-channel digital audio converters (equipment more on the professional end of things) published in Sound on Sound magazine. The sample is a much smaller group of 4 listeners, and they are testing 4 comparable pieces of gear. The test is set up in a double-blind fashion, where neither the listeners nor the tester know which choice corresponds with which piece of gear. To me this is where the test goes a bit off the rails. First, there is no control in place: there is no X option to test whether the listeners can reliably distinguish between the choices. With 4 pieces of gear tested and 4 choices on the test, their minds can reasonably assume that they should be hearing a difference every time. Second, it appears that all the listeners took the test at the same time. It should be said that this test was not attempting to be a publishable scientific study, so in all fairness they have done a pretty decent job. It’s interesting that the article twice emphasizes how little difference the listeners noticed between the devices. Add an ABX component to this sort of test and I suspect things will start to get very interesting.

A personal ABX test allows us to reconcile in our own listening spaces the hard facts of what differences we can actually appreciate with our notions of what gear might make our audio sound better. Hopefully this concept produces results that we can then act on in full confidence without elevating or diminishing the opinions of others beyond what they should be. When this happens most of the white noise in gear reviews and forum chatter becomes just that.

ABC/HR is a good utility for comparing existing audio files on a Windows system and is rumored to work on Mac systems as well.

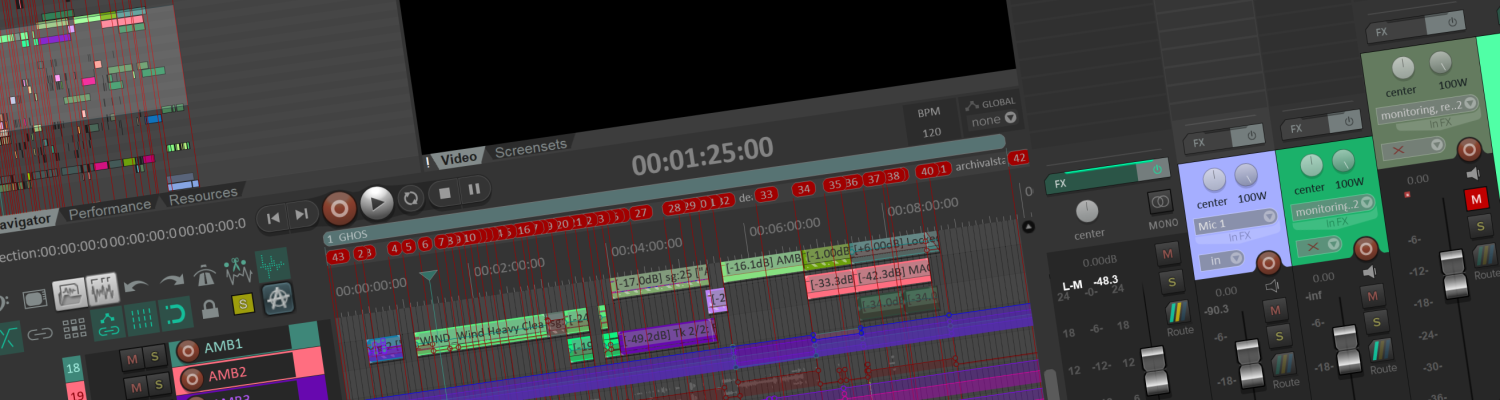

Reaper has an ABX tester in its JS Plugin library that allows for testing of audio streams from external devices as well, and it runs on both Mac and Windows.

Here is an excellent and detailed article that addresses this subject in support of a larger argument about digital audio sampling rates and bit depth:

http://xiph.org/~xiphmont/demo/neil-young.html
